Learning LangChain

- Work through the official LangChain tutorials: https://python.langchain.com/docs/tutorials

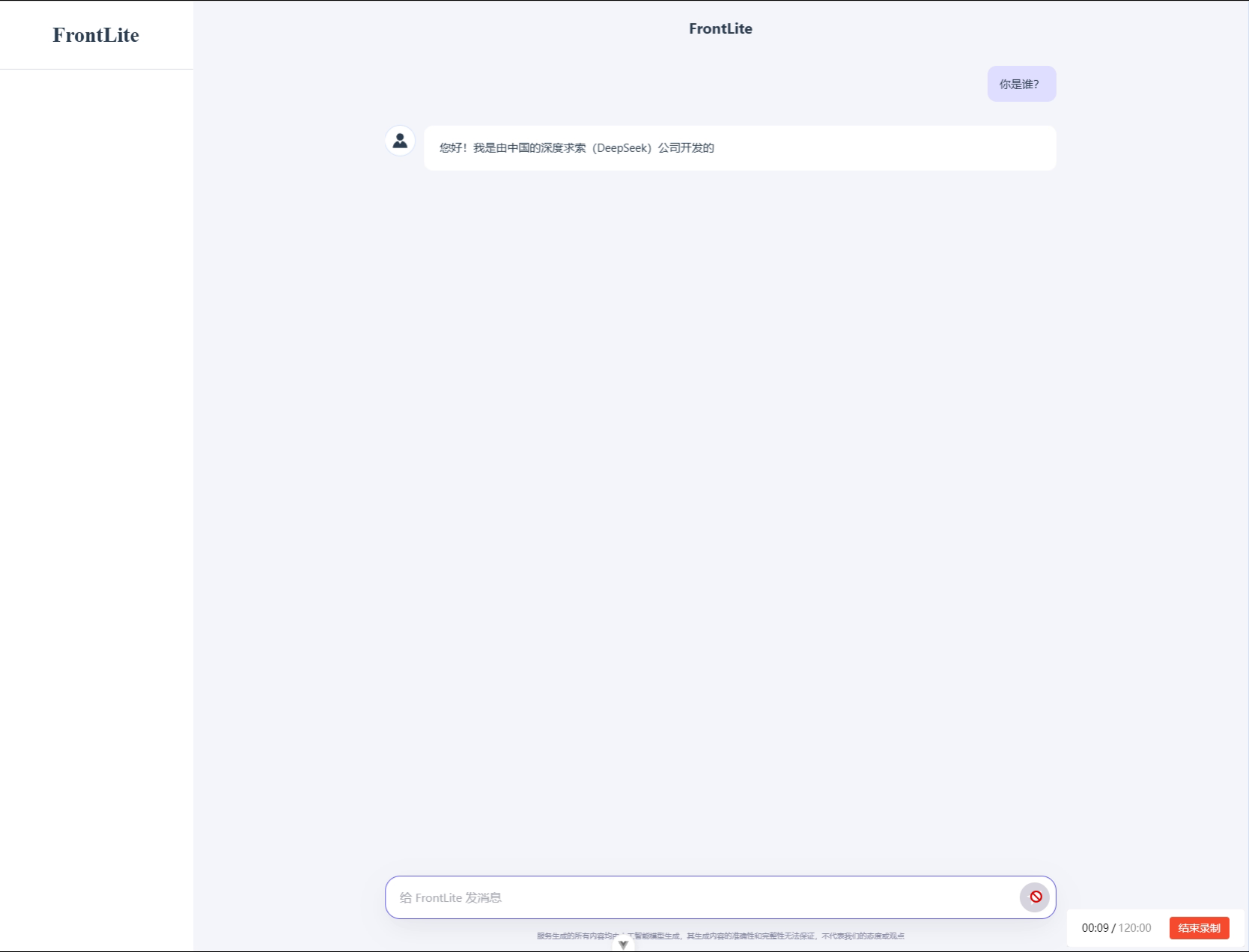

- Built a simple Q&A system modeled on the DeepSeek and Tongyi Qianwen (Qwen) chat pages.

- Try it out: 小前端助手 ("Little Front-End Assistant")

1. Hands-on Programming

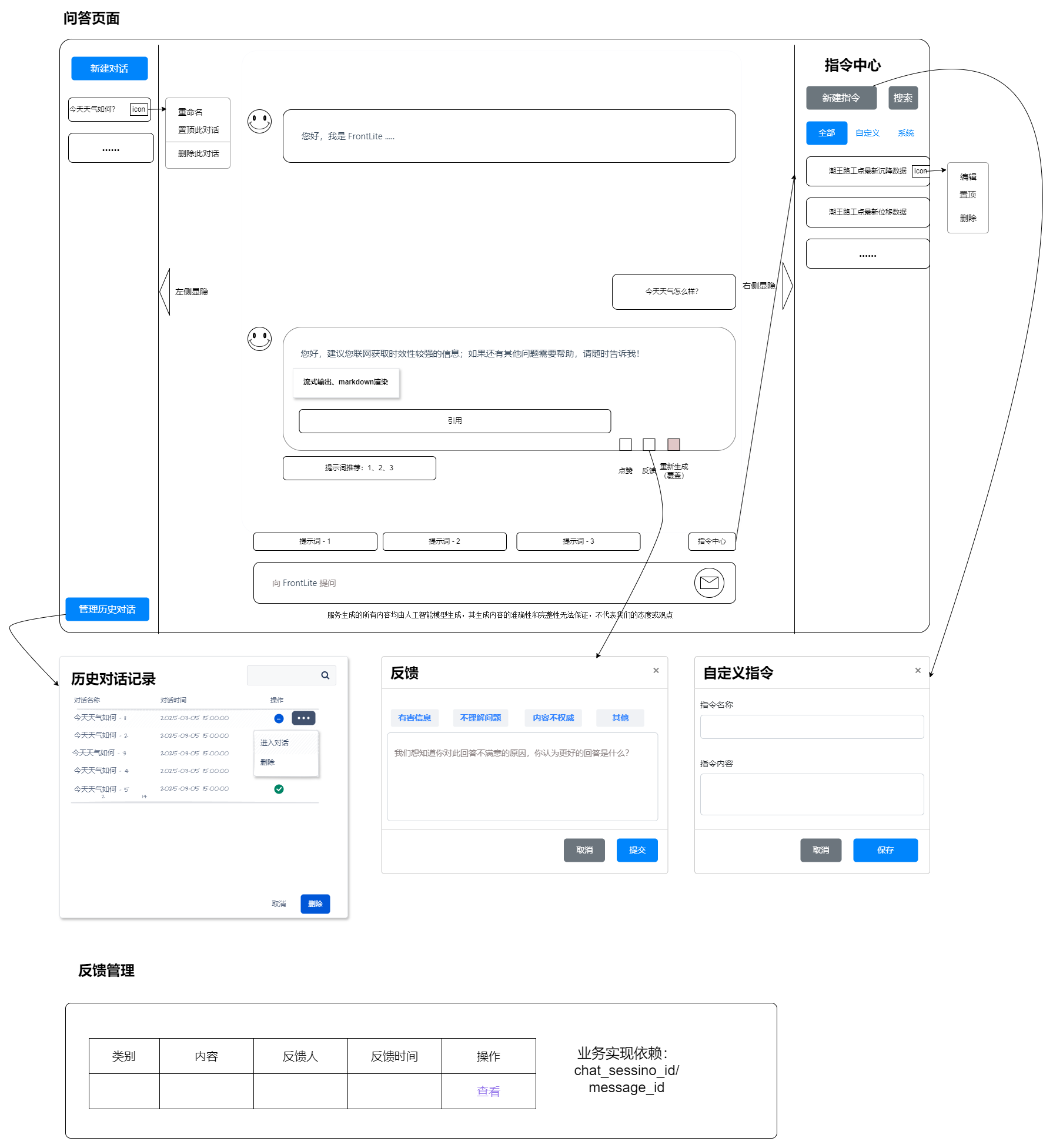

Design

Prototype

Features

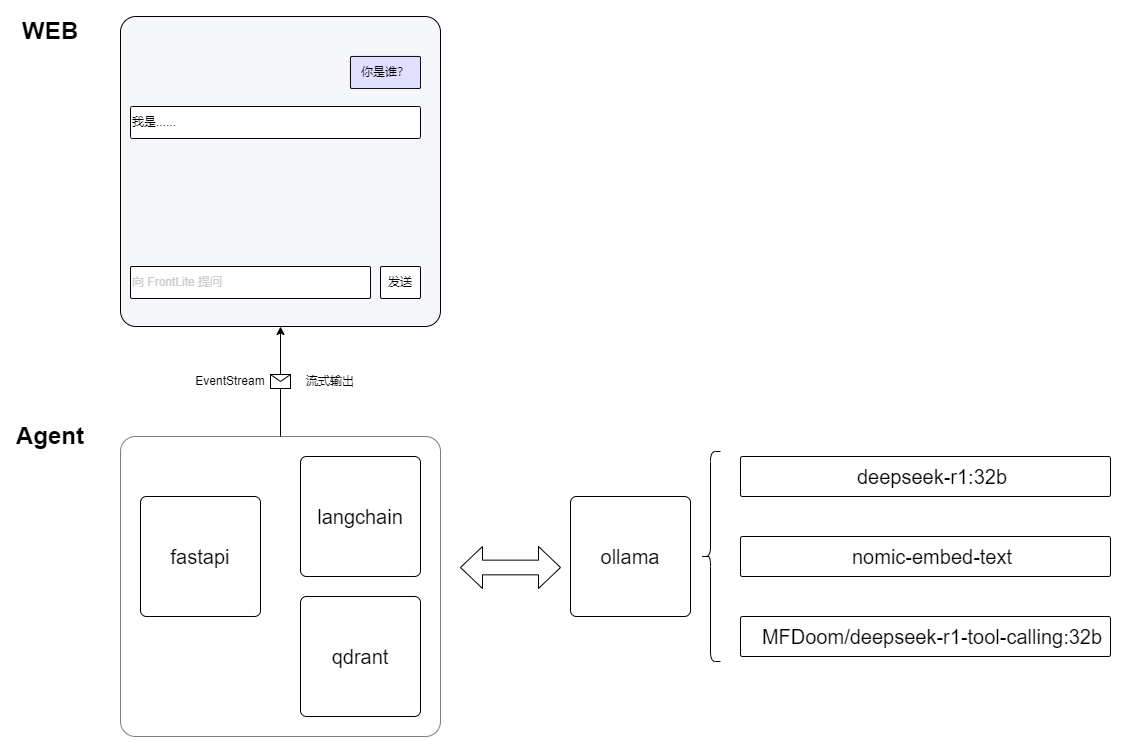

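- Use a local LLM → the deployed version calls the official DeepSeek API instead; the demo page works until its credit runs out

- Streaming output in the front-end chat box → currently non-streaming: streaming stopped working after the Docker deployment (cause not yet investigated)

- Multi-turn conversation within a single chat session

- Responses rendered as Markdown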

Examples

Front end + back end + streaming output

20250304

Multi-turn conversation

20250305, official DeepSeek API

Web search

20250306

function-call / tool-calling

Responses got slower, and the model sometimes called the same tool repeatedly; the prompt still needs tuning.

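Tech stack

Deployment

- Deploy the Python service

- The nginx reverse proxy needs response buffering turned off (`proxy_buffering off;`); otherwise nginx buffers the upstream response and the client may not see tokens as they are generated.

After deploying with Docker, streaming output stopped working anyway, so for now I gave up and simply return the whole answer in one go.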
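Besides the `proxy_buffering off;` directive in the nginx config, nginx also honors an `X-Accel-Buffering: no` response header sent by the upstream application, which disables buffering for that one response. Whether buffering is what broke streaming in the Docker deployment was never investigated, so treat this as one thing to check rather than a diagnosis. A minimal stdlib WSGI sketch of a streaming endpoint that sets the header; `fake_token_stream` is a hypothetical stand-in for the model's token stream:

```python
from typing import Callable, Iterable, Iterator, List, Tuple


def fake_token_stream(text: str) -> Iterator[bytes]:
    """Hypothetical stand-in for a model's streaming output: one word per chunk."""
    for word in text.split():
        yield (word + " ").encode("utf-8")


def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    headers: List[Tuple[str, str]] = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Cache-Control", "no-cache"),
        # Asks nginx not to buffer this particular response
        ("X-Accel-Buffering", "no"),
    ]
    start_response("200 OK", headers)
    # Returning a generator lets the WSGI server send each chunk as it is produced
    return fake_token_stream("streamed answer from the model")
```

For a quick local try, `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` serves it; a production setup would use a real WSGI/ASGI server behind nginx.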

Summary

20250628

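- Learned the basics of LangChain; studying it also taught me many LLM-related concepts and unlocked a number of new skills.

- In actual development, I find the Java ecosystem better suited to production-grade engineering. Some excellent frameworks and projects worth looking at:

- langchain4j: a Java version of LangChain, still evolving rapidly.

- langchat: a full-featured intelligent Q&A project built on the langchain4j framework; recommended for studying its hands-on implementation and design ideas.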

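2. Working Through the Official Docs

Development environment

This is the environment I used for practice; adjust it to your own setup.

- Development tools: Anaconda, PyCharm

- Models: online, the DeepSeek API; offline, models deployed with Ollama

- Middleware

- Qdrant: a vector database, deployed as a standalone service.

- The current LangChain version is v0.3, but I found the v0.2 docs easier to follow.

Environment variables

Use python-dotenv to manage environment variables in one place.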

- Install the dependency

```bash
pip install python-dotenv
```

- Create a `.env` file

```bash
# OLLAMA
OLLAMA_MODEL = "deepseek-r1:32b"
OLLAMA_MODEL_TOOL_CALLING = "MFDoom/deepseek-r1-tool-calling:32b"
OLLAMA_EMBEDDING_MODEL = "nomic-embed-text"
OLLAMA_API_KEY="SK-AAA"
OLLAMA_BASE_URL="http://172.25.65.161:11434"

# DeepSeek
DeepSeek_MODEL = "deepseek-chat"
DeepSeek_API_KEY = "replace with the API key you obtained from DeepSeek"
DeepSeek_BASE_URL = "https://api.deepseek.com"

# qdrant
QDRANT_URL = "http://172.26.31.84:6333"
QDRANT_COLLECTION_NAME = "qdrant_dev"
```

- Write a small loader module, `load_env` (the scripts below start with `import load_env`)
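`os.getenv` silently returns `None` for a missing key, which then surfaces later as a confusing error inside the model client. A hypothetical `require_env` helper, which could sit in `load_env.py` next to the `load_dotenv()` call, makes that failure explicit; this is a sketch of an optional extra check, not part of the tutorials:

```python
import os
from typing import Dict, Iterable


def require_env(names: Iterable[str]) -> Dict[str, str]:
    """Return the named environment variables, failing fast if any are missing or empty."""
    names = list(names)
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {name: os.environ[name] for name in names}


# Hypothetical usage, after load_dotenv() has run:
# settings = require_env(["DeepSeek_MODEL", "DeepSeek_API_KEY", "DeepSeek_BASE_URL"])
```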

Alternatively, paste these two lines directly wherever they are needed:

```python
# Load environment variables from the .env file
from dotenv import load_dotenv

load_dotenv()
```

Get started

llm_chain

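- Tutorial: https://python.langchain.com/docs/tutorials/llm_chain/

- Goal: a simple introduction to LangChain, building a translator with a prompt template and a chat model.

Install packages

```bash
pip install langchain
pip install langchain-openai
pip install langchain-ollama
```

Code

- Prompt utility classes: a prompt is just a string. A plain LLM takes a raw string as input, while a chat LLM takes a JSON-like list of messages with fields such as role and content, where content is a plain string. LangChain wraps this in a family of prompt utility classes.

- Model initialization: access a locally deployed model through Ollama, or the official DeepSeek service through the DeepSeek API.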
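To make the role/content structure concrete, here is a plain-Python sketch of what a chat prompt template produces: each `(role, text)` pair has its placeholders filled in and becomes one message dict. `format_chat_prompt` is a hypothetical helper for illustration, not LangChain's `ChatPromptTemplate` implementation:

```python
from typing import Dict, List, Tuple


def format_chat_prompt(template: List[Tuple[str, str]], **variables: str) -> List[Dict[str, str]]:
    """Fill {placeholders} in each (role, text) pair and return chat-style messages."""
    return [{"role": role, "content": text.format(**variables)} for role, text in template]


template = [
    ("system", "Just translate the following from English into {language}"),
    ("user", "{text}"),
]
messages = format_chat_prompt(template, language="Chinese", text="hello")
print(messages)
```

The resulting list is the shape a chat-completions style API expects; LangChain's template objects add validation and message classes on top of the same idea.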

```python
# Build a simple LLM application with chat models and prompt templates
import load_env  # loads the .env file (the load_env module described above)
import os

from langchain_ollama.chat_models import ChatOllama
from langchain_core.prompts import ChatPromptTemplate

# Option 1: call a local Ollama model
ollama_model = ChatOllama(
    model=os.getenv("OLLAMA_MODEL"),
    base_url=os.getenv("OLLAMA_BASE_URL"),
    temperature=0,
)

# Prompt template
system_template = "Just translate the following from English into {language}"
prompt_template = ChatPromptTemplate.from_messages(
    [("system", system_template), ("user", "{text}")]
)
prompt = prompt_template.invoke(
    # "中文" = Chinese, the target language
    {"language": "中文", "text": "Build a simple LLM application with chat models and prompt templates"}
)
# print(f"---> Prompt: {prompt}")
print(f"---> Local Ollama model: {ollama_model.invoke(prompt).content}")

# Option 2: call the DeepSeek API through its OpenAI-compatible endpoint
from langchain_openai import ChatOpenAI

openai_model = ChatOpenAI(
    model_name=os.getenv("DeepSeek_MODEL"),
    openai_api_key=os.getenv("DeepSeek_API_KEY"),
    openai_api_base=os.getenv("DeepSeek_BASE_URL"),
    temperature=0,
)
print(f"---> DeepSeek API: {openai_model.invoke(prompt).content}")
```

- Output

```text
---> Local Ollama model: <think> Alright, I need to help the user build a simple LLM application using chat models and prompt templates. First, I'll outline the main components they'll need: a chat model API like OpenAI's GPT-3 or similar, and some way to handle prompts.
I should start by explaining how to set up the environment. They'll probably need Python, so I'll suggest installing necessary libraries like `openai` for interacting with the API. Next, I'll walk them through getting an API key from OpenAI and setting it up securely.
Then, I'll move on to creating prompt templates. It's important to structure these clearly so that the model understands what's being asked. Maybe provide examples of different types of prompts, like question-answering or summarization.
After that, I'll cover how to send requests to the API using the prepared prompts. I'll include code snippets showing how to format the request and handle the response. It might be helpful to mention error handling here too, in case there are issues with the API call.
I should also think about user interaction. How will they input their queries? Maybe through a simple command-line interface or a web form if they want to make it more interactive. I'll suggest building a basic UI so users can easily interact with the application.
Testing is another crucial step. I'll advise them to test different prompts and scenarios to ensure the model responds as expected. They might need to tweak their prompts based on initial results to improve accuracy or relevance.
Finally, I'll touch on deployment options. If they want others to use their app, they could deploy it on a cloud platform like Heroku or AWS. Alternatively, if it's just for personal use, running it locally might be sufficient.
Throughout the process, I'll make sure to keep the instructions clear and straightforward, avoiding overly technical jargon so that even someone new to LLMs can follow along easily.
</think> 使用聊天模型和提示模板构建一个简单的LLM应用程序
---> DeepSeek API: 构建一个简单的LLM应用程序,使用聊天模型和提示模板。
```
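The local deepseek-r1 output includes the model's reasoning inside `<think>...</think>` before the actual translation. If only the answer is wanted, one simple option is to strip that block with a regular expression. `strip_think` is a hypothetical helper, and it assumes the tags appear literally in the returned content, as they do in the output above:

```python
import re

# Non-greedy match across newlines, so each <think>...</think> block is removed separately
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_think(text: str) -> str:
    """Remove <think>...</think> reasoning blocks and surrounding whitespace."""
    return THINK_BLOCK.sub("", text).strip()


raw = "<think>\nI need to translate this.\n</think> 你好"
print(strip_think(raw))
```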

retrievers

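- Tutorial: https://python.langchain.com/docs/tutorials/retrievers/

- Goal: retrieval from a vector database: parse a PDF file → store the chunks in the vector database → query the vectors (directly, or through a retriever)

- Vector database

Qdrant can be deployed with the following docker-compose.yml. After it starts, create the collection named by `QDRANT_COLLECTION_NAME` before running the code, because the code opens it with `from_existing_collection`.

```yaml
version: '3.1'
services:
  qdrant:
    container_name: qdrant
    image: 'qdrant/qdrant'
    restart: always
    ports:
      - "6333:6333"
      - "6334:6334"
    volumes:
      - ./qdrant:/qdrant/storage
```

- Code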

```python
# Build a semantic search engine
# Requires: pip install langchain-community pypdf langchain-text-splitters langchain-ollama langchain-qdrant
import load_env
import os

# Load the PDF
from langchain_community.document_loaders import PyPDFLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter

file_path = "./data/pdf/nke-10k-2023.pdf"
loader = PyPDFLoader(file_path)
docs = loader.load()
print("\n========== Loading documents ==========")
print(f"docs:{len(docs)}")
# print(f"{docs[0].page_content[:200]}\n")
# print(f"metadata:{docs[0].metadata}")

# Recursive splitting
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000, chunk_overlap=200, add_start_index=True
)
all_splits = text_splitter.split_documents(docs)
print("\n========== Splitting ==========")
print(f"all_splits : {len(all_splits)}")

# Vector store
from langchain_ollama import OllamaEmbeddings
from langchain_qdrant import QdrantVectorStore

embeddings = OllamaEmbeddings(
    model=os.getenv("OLLAMA_EMBEDDING_MODEL"), base_url=os.getenv("OLLAMA_BASE_URL")
)
# from_existing_collection expects the collection to exist in Qdrant already
vector_store = QdrantVectorStore.from_existing_collection(
    collection_name=os.getenv("QDRANT_COLLECTION_NAME"),
    embedding=embeddings,
    url=os.getenv("QDRANT_URL"),
)
ids = vector_store.add_documents(documents=all_splits)

print("\n========== Vector search ==========")
results = vector_store.similarity_search(
    "How many distribution centers does Nike have in the US?"
)
print(f"usage 1: similarity_search: {results[0]}")

results = vector_store.similarity_search_with_score("What was Nike's revenue in 2023?")
doc, score = results[0]
print(f"\nusage 2: similarity_search_with_score: {score}")

embedding = embeddings.embed_query("How were Nike's margins impacted in 2023?")
results = vector_store.similarity_search_by_vector(embedding)
print(f"\nusage 3: similarity_search_by_vector: {results[0]}")

# Retrievers
# Option A: a custom retriever built with @chain
# from typing import List
# from langchain_core.documents import Document
# from langchain_core.runnables import chain
#
# @chain
# def retriever(query: str) -> List[Document]:
#     return vector_store.similarity_search(query, k=1)
#
# print("\n========== Custom retriever ==========")
# print(retriever.batch(
#     [
#         "How many distribution centers does Nike have in the US?",
#         "When was Nike incorporated?",
#     ],
# ))

# Option B: the vector store's built-in retriever
print("\n========== Built-in vector store retriever ==========")
retriever = vector_store.as_retriever(
    search_type="similarity",
    search_kwargs={"k": 1},
)
print(retriever.batch(
    [
        "How many distribution centers does Nike have in the US?",
        "When was Nike incorporated?",
    ],
))
```
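Conceptually, `similarity_search` embeds the query, compares it with the stored chunk vectors, and returns the `k` closest chunks; `as_retriever(search_type="similarity", search_kwargs={"k": 1})` wraps that same search. The toy sketch below shows the idea with a hypothetical bag-of-words "embedding" and cosine similarity over made-up chunks. The real code uses `OllamaEmbeddings`, and Qdrant's scores depend on the distance metric the collection was created with:

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def similarity_search_with_score(query: str, chunks: List[str], k: int = 1) -> List[Tuple[str, float]]:
    """Score every chunk against the query and return the k best (chunk, score) pairs."""
    q = embed(query)
    scored = [(chunk, cosine(q, embed(chunk))) for chunk in chunks]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


chunks = [
    "notes about distribution centers",
    "notes about when the company was incorporated",
    "notes about revenue",
]
print(similarity_search_with_score("when was the company incorporated", chunks, k=1))
```

A real vector database replaces the linear scan with an approximate nearest-neighbor index, which is what makes this practical for thousands of chunks.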

classification

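- Tutorial: https://python.langchain.com/docs/tutorials/classification/

- Goal: structured output

Model choice

- Neither a local DeepSeek model served by Ollama nor the DeepSeek API could complete this tutorial; switching to QwQ on Ollama worked.

- To get structured output from DeepSeek, see the JSON Output example in the official DeepSeek documentation.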
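With a JSON mode (DeepSeek's JSON Output guide describes setting `response_format` to `{"type": "json_object"}` and mentioning JSON in the prompt; check the current docs), the model returns a JSON string that you parse and validate yourself instead of relying on `with_structured_output`. A minimal stdlib sketch of that validation step, mirroring the `Classification` fields from the tutorial; the reply string is hard-coded and hypothetical:

```python
import json
from dataclasses import dataclass


@dataclass
class Classification:
    sentiment: str
    aggressiveness: int  # expected range 1..10
    language: str


def parse_classification(raw: str) -> Classification:
    """Parse a JSON reply into Classification, rejecting out-of-range aggressiveness."""
    data = json.loads(raw)
    result = Classification(
        sentiment=str(data["sentiment"]),
        aggressiveness=int(data["aggressiveness"]),
        language=str(data["language"]),
    )
    if not 1 <= result.aggressiveness <= 10:
        raise ValueError(f"aggressiveness out of range: {result.aggressiveness}")
    return result


# Hypothetical model reply, hard-coded for illustration
reply = '{"sentiment": "positive", "aggressiveness": 1, "language": "Spanish"}'
print(parse_classification(reply))
```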

Code

```python
# Classify Text into Labels
import load_env
import os

from langchain_ollama.chat_models import ChatOllama
from langchain_core.prompts import ChatPromptTemplate
from pydantic import BaseModel, Field

tagging_prompt = ChatPromptTemplate.from_template(
    """
Extract the desired information from the following passage.

Only extract the properties mentioned in the 'Classification' function.

Passage:
{input}
"""
)


class Classification(BaseModel):
    sentiment: str = Field(description="The sentiment of the text")
    aggressiveness: int = Field(
        description="How aggressive the text is on a scale from 1 to 10"
    )
    language: str = Field(description="The language the text is written in")


# QwQ served by Ollama; the DeepSeek models did not work for this tutorial
model = ChatOllama(
    model="qwq:latest",
    base_url=os.getenv("OLLAMA_BASE_URL"),
    temperature=0,
).with_structured_output(Classification)

inp = "Estoy increiblemente contento de haberte conocido! Creo que seremos muy buenos amigos!"
prompt = tagging_prompt.invoke({"input": inp})
response = model.invoke(prompt)
print("\n========== QwQ structured output ==========")
print(response)
```

extraction

- Tutorial: https://python.langchain.com/docs/tutorials/extraction/

- Goal: extract structured data, including manually adding tool-call messages

- I could not get this tutorial to run.

Orchestration

chatbot

- Tutorial: https://python.langchain.com/docs/tutorials/chatbot/

- Goal: a chatbot example

Counting token usage
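The `tiktoken_counter` in the chatbot code charges a fixed overhead per message: 3 tokens of reply priming, plus, for each message, 3 tokens and the tokens of its role and content, and 1 more plus the name's tokens when the message has a `name`. A stdlib sketch of the same arithmetic, with a hypothetical whitespace tokenizer standing in for the tokenizer loaded from `./chat_tokenizer_dir`:

```python
from typing import List, Optional, Tuple


def count_tokens(text: str) -> int:
    # Hypothetical tokenizer: one token per whitespace-separated word
    return len(text.split())


def count_message_tokens(messages: List[Tuple[str, str, Optional[str]]]) -> int:
    """Count tokens for (role, content, name) messages using fixed per-message overhead."""
    num_tokens = 3  # priming for the assistant's reply
    tokens_per_message = 3
    tokens_per_name = 1
    for role, content, name in messages:
        num_tokens += tokens_per_message + count_tokens(role) + count_tokens(content)
        if name:
            num_tokens += tokens_per_name + count_tokens(name)
    return num_tokens


history = [
    ("system", "you're a good assistant", None),
    ("user", "hi! I'm bob", None),
]
print(count_message_tokens(history))  # 3 + (3 + 1 + 4) + (3 + 1 + 3) = 18
```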

Code (LangChain)

I haven't fully worked out the message-management part yet.

```python
# Build a Chatbot
import load_env
import os
from operator import itemgetter
from typing import List

# Create the model (DeepSeek API via the OpenAI-compatible client)
from langchain_openai import ChatOpenAI

model = ChatOpenAI(
    model_name=os.getenv("DeepSeek_MODEL"),
    openai_api_key=os.getenv("DeepSeek_API_KEY"),
    openai_api_base=os.getenv("DeepSeek_BASE_URL"),
    temperature=0,
)

# Message history
from langchain_core.chat_history import BaseChatMessageHistory, InMemoryChatMessageHistory
from langchain_core.runnables.history import RunnableWithMessageHistory
from langchain_core.messages import HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.runnables import RunnablePassthrough

# Trim the message history with a custom token counter
from transformers import AutoTokenizer
from langchain_core.messages import trim_messages, BaseMessage, AIMessage, SystemMessage, ToolMessage

# Local directory holding the tokenizer files for the chat model
chat_tokenizer_dir = "./chat_tokenizer_dir"
tokenizer = AutoTokenizer.from_pretrained(chat_tokenizer_dir, trust_remote_code=True)


def str_token_counter(text: str) -> int:
    tokens = tokenizer.encode(text)
    return len(tokens)


def tiktoken_counter(messages: List[BaseMessage]) -> int:
    num_tokens = 3  # every reply is primed with <|start|>assistant<|message|>
    tokens_per_message = 3
    tokens_per_name = 1
    for msg in messages:
        if isinstance(msg, HumanMessage):
            role = "user"
        elif isinstance(msg, AIMessage):
            role = "assistant"
        elif isinstance(msg, ToolMessage):
            role = "tool"
        elif isinstance(msg, SystemMessage):
            role = "system"
        else:
            raise ValueError(f"Unsupported messages type {msg.__class__}")
        num_tokens += (
            tokens_per_message
            + str_token_counter(role)
            + str_token_counter(msg.content)
        )
        if msg.name:
            num_tokens += tokens_per_name + str_token_counter(msg.name)
    return num_tokens


trimmer = trim_messages(
    max_tokens=65,
    strategy="last",
    token_counter=tiktoken_counter,
    include_system=True,
    allow_partial=False,
    start_on="human",
)

store = {}


def get_session_history(session_id: str) -> BaseChatMessageHistory:
    # One in-memory history per session_id
    if session_id not in store:
        store[session_id] = InMemoryChatMessageHistory()
    return store[session_id]


config = {"configurable": {"session_id": "abc2"}}

prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a helpful assistant. Answer all questions to the best of your ability in {language}.",
        ),
        MessagesPlaceholder(variable_name="messages"),
    ]
)

chain = (
    RunnablePassthrough.assign(messages=itemgetter("messages") | trimmer)
    | prompt
    | model
)

# input_messages_key specifies which input key holds the messages to record in the chat history
with_message_history = RunnableWithMessageHistory(
    chain, get_session_history, input_messages_key="messages"
)

# Pre-populate the session history
messages = [
    SystemMessage(content="you're a good assistant"),
    HumanMessage(content="hi! I'm bob"),
    AIMessage(content="hi!"),
    HumanMessage(content="I like vanilla ice cream"),
    AIMessage(content="nice"),
    HumanMessage(content="whats 2 + 2"),
    AIMessage(content="4"),
    HumanMessage(content="thanks"),
    AIMessage(content="no problem!"),
    HumanMessage(content="having fun?"),
    AIMessage(content="yes!"),
]
history = get_session_history("abc2")
history.add_messages(messages)

print("\n========== Managing Conversation History ==========")
response = with_message_history.invoke(
    {
        "messages": [HumanMessage(content="whats my name?")],
        "language": "English",
    },
    config=config,
)
print(f"response 1: {response}")

response = with_message_history.invoke(
    {
        "messages": [HumanMessage(content="what math problem did i ask?")],
        "language": "English",
    },
    config=config,
)
print(f"response 2: {response}")
```

- Code (LangGraph)

```python
import os
from typing import List, Sequence

from typing_extensions import Annotated, TypedDict

import load_env
from langchain_core.messages import (
    AIMessage,
    BaseMessage,
    HumanMessage,
    SystemMessage,
    ToolMessage,
    trim_messages,
)
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import START, StateGraph
from langgraph.graph.message import add_messages

# Load the model
from langchain_ollama.chat_models import ChatOllama

model = ChatOllama(
    model=os.getenv("OLLAMA_MODEL"),
    base_url=os.getenv("OLLAMA_BASE_URL"),
    temperature=0,
)

# Managing Conversation History
from transformers import AutoTokenizer

chat_tokenizer_dir = "./chat_tokenizer_dir"
tokenizer = AutoTokenizer.from_pretrained(chat_tokenizer_dir, trust_remote_code=True)


def str_token_counter(text: str) -> int:
    tokens = tokenizer.encode(text)
    return len(tokens)


def tiktoken_counter(messages: List[BaseMessage]) -> int:
    num_tokens = 3  # every reply is primed with <|start|>assistant<|message|>
    tokens_per_message = 3
    tokens_per_name = 1
    for msg in messages:
        if isinstance(msg, HumanMessage):
            role = "user"
        elif isinstance(msg, AIMessage):
            role = "assistant"
        elif isinstance(msg, ToolMessage):
            role = "tool"
        elif isinstance(msg, SystemMessage):
            role = "system"
        else:
            raise ValueError(f"Unsupported messages type {msg.__class__}")
        num_tokens += (
            tokens_per_message
            + str_token_counter(role)
            + str_token_counter(msg.content)
        )
        if msg.name:
            num_tokens += tokens_per_name + str_token_counter(msg.name)
    return num_tokens


trimmer = trim_messages(
    max_tokens=65,
    strategy="last",
    token_counter=tiktoken_counter,
    include_system=True,
    allow_partial=False,
    start_on="human",
)

messages = [
    SystemMessage(content="you're a good assistant"),
    HumanMessage(content="hi! I'm bob"),
    AIMessage(content="hi!"),
    HumanMessage(content="I like vanilla ice cream"),
    AIMessage(content="nice"),
    HumanMessage(content="whats 2 + 2"),
    AIMessage(content="4"),
    HumanMessage(content="thanks"),
    AIMessage(content="no problem!"),
    HumanMessage(content="having fun?"),
    AIMessage(content="yes!"),
]

print("\n========== Managing Conversation History ==========")
print(trimmer.invoke(messages))


class State(TypedDict):
    messages: Annotated[Sequence[BaseMessage], add_messages]
    language: str


prompt_template = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a helpful assistant. Answer all questions to the best of your ability in {language}.",
        ),
        MessagesPlaceholder(variable_name="messages"),
    ]
)


def call_model(state: State):
    trimmed_messages = trimmer.invoke(state["messages"])
    prompt = prompt_template.invoke(
        {"messages": trimmed_messages, "language": state["language"]}
    )
    response = model.invoke(prompt)
    return {"messages": response}


workflow = StateGraph(state_schema=State)
workflow.add_edge(START, "model")
workflow.add_node("model", call_model)

# MemorySaver keeps the conversation state per thread_id
app = workflow.compile(checkpointer=MemorySaver())
config = {"configurable": {"thread_id": "abc678"}}

query = "What math problem did I ask?"
language = "English"
input_messages = messages + [HumanMessage(query)]
output = app.invoke(
    {"messages": input_messages, "language": language},
    config,
)
output["messages"][-1].pretty_print()
```
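`trim_messages(strategy="last", include_system=True, allow_partial=False, start_on="human")` keeps the system message, then keeps as many of the most recent messages as fit in `max_tokens`, and finally makes sure the kept history begins with a human message. Below is a simplified stdlib sketch of that behavior, written from the documented parameters rather than from LangChain's source, with a hypothetical word-count token counter. It helps explain why an early turn such as "hi! I'm bob" can drop out of the model's context:

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, content)


def count_tokens(messages: List[Message]) -> int:
    # Hypothetical counter: one token per whitespace-separated word, no per-message overhead
    return sum(len(content.split()) for _, content in messages)


def trim_last(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep a leading system message plus the newest messages that fit, starting on a user turn."""
    system = messages[:1] if messages and messages[0][0] == "system" else []
    rest = messages[len(system):]
    kept: List[Message] = []
    # Walk backwards from the newest message; stop at the first one that does not fit
    for msg in reversed(rest):
        if count_tokens(system + [msg] + kept) > max_tokens:
            break
        kept.insert(0, msg)
    # Drop the oldest kept messages until the history starts on a user turn
    while kept and kept[0][0] != "user":
        kept.pop(0)
    return system + kept


chat = [
    ("system", "be nice"),
    ("user", "hi i am bob"),
    ("assistant", "hi"),
    ("user", "what is 2 + 2"),
    ("assistant", "4"),
]
print(trim_last(chat, max_tokens=8))
```

With a budget of 8, the turn that introduces "bob" is dropped, so a follow-up "what's my name?" would reach the model without that information.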

agents

- Tutorial: https://python.langchain.com/docs/tutorials/agents/

- Goal: a search agent example, implemented with LangChain's agent module
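Stripped of the framework, a tool-calling agent alternates between asking the model what to do and executing the tool it requests, until the model answers directly. The sketch below uses a hypothetical `fake_model` and `fake_search` in place of a real chat model and search tool; it is an illustration of the loop, not the tutorial's code. The `max_steps` cap is one simple guard against the repeated tool calls noted in the hands-on project:

```python
from typing import Callable, Dict, List, Optional


def fake_search(query: str) -> str:
    """Hypothetical search tool: returns a canned snippet."""
    return f"search results for '{query}'"


TOOLS: Dict[str, Callable[[str], str]] = {"search": fake_search}


def fake_model(history: List[Dict[str, str]]) -> Dict[str, Optional[str]]:
    """Hypothetical model: requests one search, then answers once a tool result is present."""
    if any(m["role"] == "tool" for m in history):
        return {"tool": None, "content": "final answer based on " + history[-1]["content"]}
    return {"tool": "search", "content": history[-1]["content"]}


def run_agent(question: str, max_steps: int = 3) -> str:
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = fake_model(history)
        if reply["tool"] is None:
            return reply["content"]
        # Execute the requested tool and feed its result back to the model
        result = TOOLS[reply["tool"]](reply["content"])
        history.append({"role": "tool", "content": result})
    return "stopped: too many tool calls"


print(run_agent("weather in SF"))
```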